Meta Announces SAM 3 and SAM 3D

The two models are designed to bring a sharper real-world understanding to AI, with possible impact on video editing, AR, and other creative tools.

Meta has officially released SAM 3 and SAM 3D, advancing its Segment Anything initiative with improved multimodal capabilities. Available via the new Segment Anything Playground, the models strengthen the link between language and visual interpretation, providing an upgraded toolkit for developers, researchers, and creative professionals working with segmentation and image understanding.

The update signals that Meta is serious about building the next generation of creative tools and about making AI better at understanding and interacting with the real world.

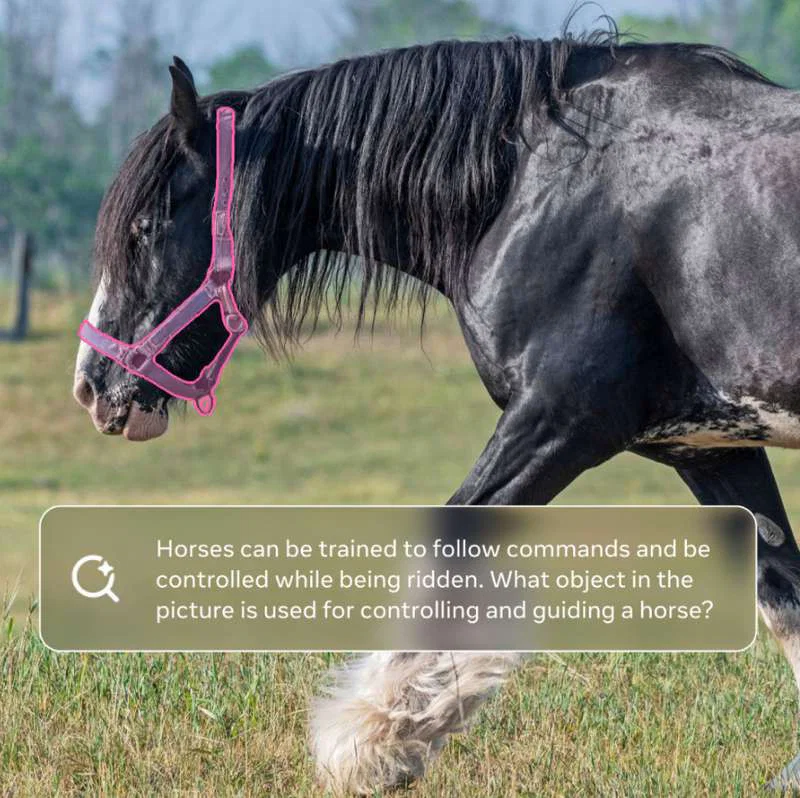

SAM 3 Understands Complex Text Prompts

While previous SAM models could isolate objects based on visual cues like a click or a bounding box, SAM 3 introduces a powerful new capability: text-powered segmentation. This means you can now simply describe an object, and the AI will find it.

Traditional AI models have often been limited to a fixed set of simple labels like “car” or “person.” SAM 3 removes this limitation and understands detailed, specific descriptions, such as a phrase like “the striped red umbrella” rather than just “umbrella.”

This precision opens up a world of practical applications. Meta confirmed it is already integrating SAM 3 into its own products. Soon, creators using the Edits video app will be able to apply effects to specific people or objects. New creation experiences powered by SAM 3 are also coming to Vibes on the Meta AI app and meta.ai.

SAM 3D Creates 3D Models from a Single Image

SAM 3D may be the most significant update, offering the ability to generate a 3D reconstruction from only one 2D image. A task that once required several photos or specialized tools is quickly becoming accessible to everyday users.

The SAM 3D suite consists of two open-source models:

- SAM 3D Objects: For reconstructing objects and scenes.

- SAM 3D Body: For estimating human body shape and pose.

Meta says SAM 3D Objects “significantly outperforms existing methods,” positioning it as a meaningful step forward in both accuracy and stability.

The potential reaches further than creative experimentation. SAM 3D could support developments in areas such as robotics, research, and sports medicine. For most people, though, the impact will show up in everyday use: Meta has already integrated the technology into Facebook Marketplace through a “View in Room” feature that helps shoppers preview furniture in their homes before purchasing.

Explore the Future on the Segment Anything Playground

You don’t need to be a tech expert to experiment with these powerful tools. Meta has launched the Segment Anything Playground, a user-friendly platform where anyone can test SAM 3 and SAM 3D.

Users can upload their own images or videos and start experimenting. Prompt SAM 3 with text to isolate objects, or use SAM 3D to generate a 3D view of a scene, rearrange it virtually, or add 3D effects. The platform also offers templates for both practical tasks (like blurring faces or license plates) and fun video edits (like adding motion trails or spotlight effects).
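For readers curious how a template like face or license-plate blurring can build on segmentation, here is a minimal sketch of the general idea. It does not call SAM 3 or any Meta API. The mask is hand-written as a stand-in for what a segmentation model might return, and the function name `redact_region` is hypothetical. The sketch only shows that once a mask marks which pixels belong to an object, an effect can be limited to those pixels.

```python
def redact_region(image, mask):
    """Return a copy of `image` where every masked pixel is replaced
    by the average value of the masked region (a crude "blur").

    `image` is a grid of grayscale values; `mask` is a same-shaped grid
    of booleans. Both are illustrative stand-ins, not SAM 3 output.
    """
    # Collect the pixel values that the mask selects.
    masked_values = [
        pixel
        for img_row, mask_row in zip(image, mask)
        for pixel, selected in zip(img_row, mask_row)
        if selected
    ]
    if not masked_values:
        # Nothing selected: return an unchanged copy.
        return [row[:] for row in image]

    fill = sum(masked_values) // len(masked_values)

    # Rebuild the image, touching only the selected pixels.
    return [
        [fill if selected else pixel for pixel, selected in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# A tiny 4x4 "image" with a bright object in the middle.
image = [
    [10, 10, 10, 10],
    [10, 200, 100, 10],
    [10, 100, 200, 10],
    [10, 10, 10, 10],
]

# Hand-written mask marking the object (assumed, not model output).
mask = [
    [False, False, False, False],
    [False, True, True, False],
    [False, True, True, False],
    [False, False, False, False],
]

result = redact_region(image, mask)
for row in result:
    print(row)
```

Only the four center pixels change; the background is left untouched, which is what makes per-object effects possible once a mask is available.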

In a move welcomed by the research community, Meta is also open-sourcing key components of this release. For SAM 3, this includes the model weights and a new benchmark dataset. For SAM 3D, the company is sharing model checkpoints and code, along with a novel, more rigorous benchmark for 3D reconstruction designed to push the entire field forward.
