On October 19, Amazon Web Services suffered a DNS failure in its DynamoDB API, disrupting traffic through its US-EAST-1 region in Northern Virginia. The outage caused widespread slowdowns and exposed the internet’s dependence on AWS.

Amazon later identified the problem as a software bug in its DNS management system. A timing error, known as a “race condition,” caused an outdated DNS entry to overwrite the current configuration. As a result, the IP addresses for a key regional endpoint were wiped, cutting off access to DynamoDB.
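To make the failure mode concrete, here is a minimal sketch of how a delayed writer can clobber a newer configuration, and how a version check guards against it. It is an illustration only, not Amazon's actual DNS management system: the `DnsRecord` class, the `apply_unchecked` and `apply_checked` functions, the version numbers, and the addresses are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DnsRecord:
    """Hypothetical record store for a single endpoint."""
    version: int = 0
    addresses: list[str] = field(default_factory=list)


def apply_unchecked(record: DnsRecord, plan_version: int, addresses: list[str]) -> None:
    """Last writer wins, regardless of which plan is newer."""
    record.version = plan_version
    record.addresses = list(addresses)


def apply_checked(record: DnsRecord, plan_version: int, addresses: list[str]) -> bool:
    """Reject any plan that is not newer than the one already applied."""
    if plan_version <= record.version:
        return False
    record.version = plan_version
    record.addresses = list(addresses)
    return True


# Assumed scenario: a slow worker still holds an outdated plan (version 1,
# no addresses) and writes it after a fast worker has applied version 2.
new_plan = (2, ["10.0.0.2"])
stale_plan = (1, [])

unchecked = DnsRecord()
apply_unchecked(unchecked, *new_plan)
apply_unchecked(unchecked, *stale_plan)  # the stale write lands last and wins

checked = DnsRecord()
apply_checked(checked, *new_plan)
stale_applied = apply_checked(checked, *stale_plan)  # rejected as outdated
```

In the unchecked case the endpoint ends up with an empty address list, mirroring the wiped entry described above. In the checked case the stale plan is refused and the current addresses survive.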

Once DynamoDB went down, the problems spread fast. Services such as Lambda, Fargate, and Redshift, all of which rely on DynamoDB for functions like login and data handling, began to fail as well. Engineers needed around 11 hours to bring the main issue under control, and nearly 16 hours passed before everything was fully back online.

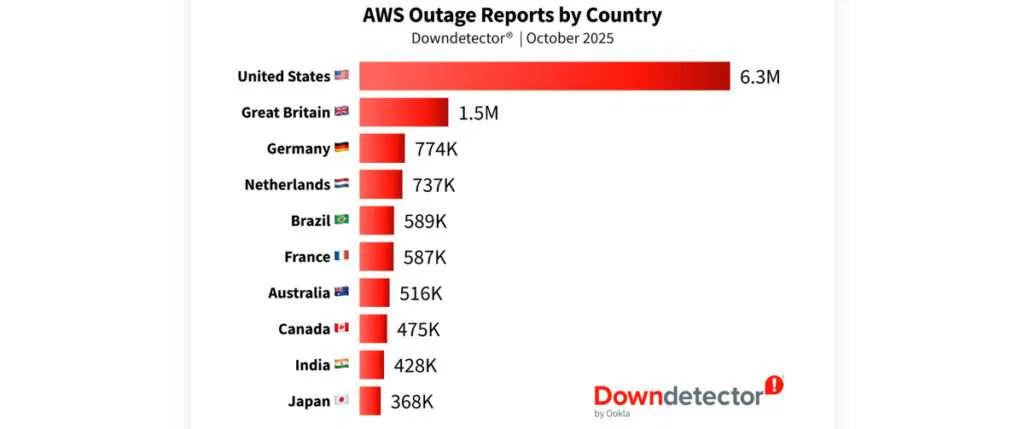

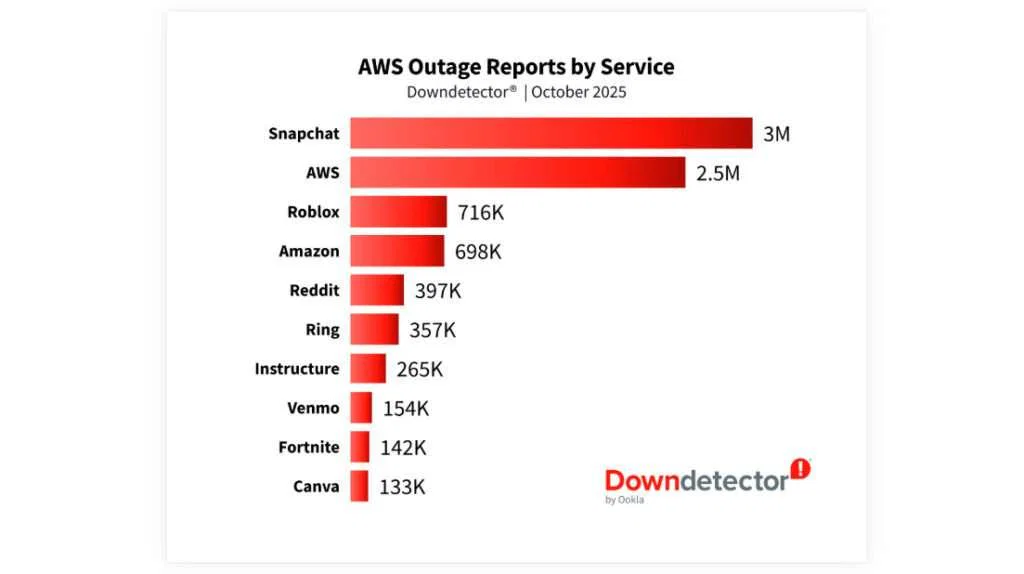

According to data from Ookla’s Downdetector, more than 17 million user reports poured in from 60 countries, including over six million from the United States. Popular platforms such as Snapchat, Roblox, and Reddit were hit, as were Amazon’s own retail and Ring services. The ripple effects also reached government websites, financial systems, and online education platforms.

For AWS, all roads lead back to one place: US-EAST-1. It’s the company’s oldest, busiest, and most essential region, the hidden machinery behind much of the modern web. When that hub faltered, the shockwave spread far beyond Amazon’s servers. Services that seemed entirely separate began to stumble, revealing just how tightly the digital world is woven together.

Amazon says it has turned off the faulty DNS automation that caused the DynamoDB issue and is rebuilding the system to keep the failure from recurring. But the incident makes one thing clear: no amount of backup hardware helps if the software itself breaks. A single software bug can still take down large parts of the internet before anyone can react.

Although such incidents can’t be completely avoided, they offer valuable lessons. Industry experts advise cloud providers to strengthen resilience through multi-region setups, diversified dependencies, and full-scale disaster simulations that mimic regional failures. Customers, meanwhile, are encouraged to design their applications to degrade gracefully, maintaining essential functions even during partial outages, to help preserve user confidence.
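As a rough illustration of graceful degradation on the customer side, the sketch below serves the last known good value when a backend call fails and flags the response as degraded. Every name here (`ProfileService`, `PrimaryUnavailable`, `fetch_profile`, the one-hour staleness limit) is hypothetical and chosen for the example. A real application would tune the staleness limit and decide per feature what is safe to serve from cache.

```python
import time


class PrimaryUnavailable(Exception):
    """Raised by the (hypothetical) backend client when the primary is down."""


class ProfileService:
    """Serves fresh data when possible, cached data when the backend fails."""

    def __init__(self, fetch, max_stale_seconds=3600.0, clock=time.monotonic):
        self._fetch = fetch
        self._cache = {}  # user_id -> (value, time it was stored)
        self._max_stale = max_stale_seconds
        self._clock = clock

    def get(self, user_id):
        try:
            value = self._fetch(user_id)
        except PrimaryUnavailable:
            cached = self._cache.get(user_id)
            if cached is not None and self._clock() - cached[1] <= self._max_stale:
                # Backend is down, but a recent copy exists: serve it, flagged.
                return {"data": cached[0], "degraded": True}
            # Nothing usable in cache: return an empty, flagged response
            # so the caller can hide the feature instead of crashing.
            return {"data": None, "degraded": True}
        self._cache[user_id] = (value, self._clock())
        return {"data": value, "degraded": False}


# Simulated backend whose availability can be toggled for the demo.
backend = {"up": True}


def fetch_profile(user_id):
    if not backend["up"]:
        raise PrimaryUnavailable(user_id)
    return {"id": user_id, "name": "example"}


service = ProfileService(fetch_profile)
first = service.get("u1")   # backend healthy: fresh data
backend["up"] = False
second = service.get("u1")  # backend down: cached data, marked degraded
third = service.get("u2")   # backend down, nothing cached: empty, degraded
```

The `degraded` flag lets the calling code keep essential functions running, for example by showing cached content with a notice, rather than failing the whole request.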

At a broader level, the incident highlights the extent to which cloud infrastructure has become a critical layer of global connectivity, supporting everything from e-commerce to public administration. As regulatory efforts in the EU and UK gain momentum, this failure will likely add pressure for enhanced transparency and stronger governance of core cloud operations.
