A new BBC-led study is casting serious doubt on how trustworthy AI chatbots really are when it comes to news. The research, done with a number of European broadcasters, found that almost half of the answers these systems gave contained mistakes. It’s a worrying sign, especially as more people rely on AI tools instead of traditional search engines to get their information.

OpenAI, Google, and Microsoft have all been pushing their AI chatbots as the future of online search and analysis. But even with all the work that’s gone into fixing “hallucinations,” the latest evidence shows there’s still a long road ahead, and it’s unclear if the problem can ever be fully solved.

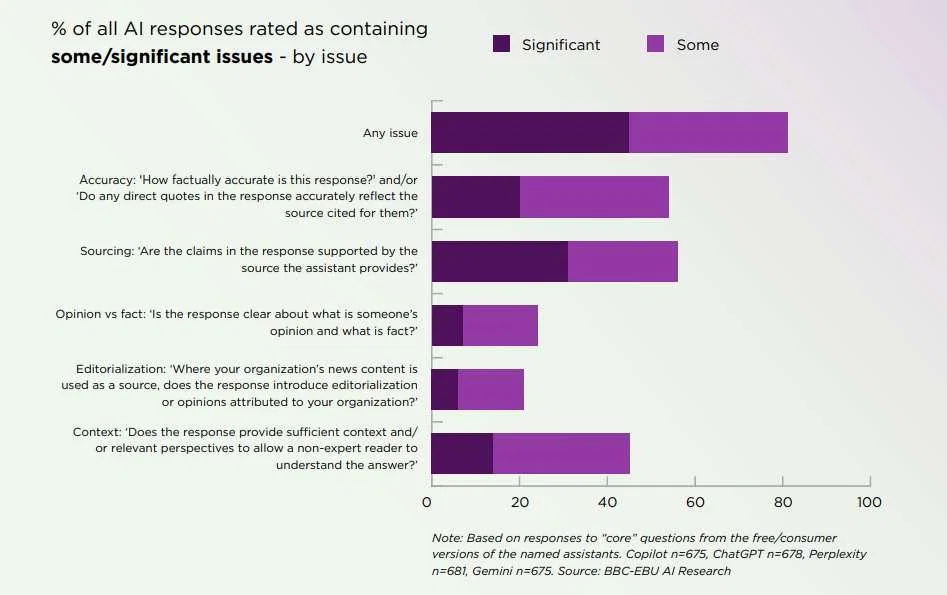

In the study, 22 public media outlets from 18 countries tested several AI chatbots across 14 languages. When asked about specific news stories, almost half of the responses contained errors, from small factual slips and misquotes to outdated details. The most common issue, however, was a failure to properly cite or attribute sources.

The chatbots often linked to sources or articles that don’t actually back up their claims. Even when they cited real material, they frequently failed to distinguish between factual reporting, opinion pieces, and outright satire.

Beyond simple factual mistakes, the study found that many chatbots are slow to update information about major public figures. In one test scenario, systems including ChatGPT, Copilot, and Gemini all continued to identify Pope Francis as the current pontiff even after his successor, Leo XIV, had taken office. In an odd twist, Copilot correctly listed a date of death for Francis while still referring to him as alive. Similar mix-ups appeared when the chatbots were asked about the current German chancellor and NATO’s secretary-general.

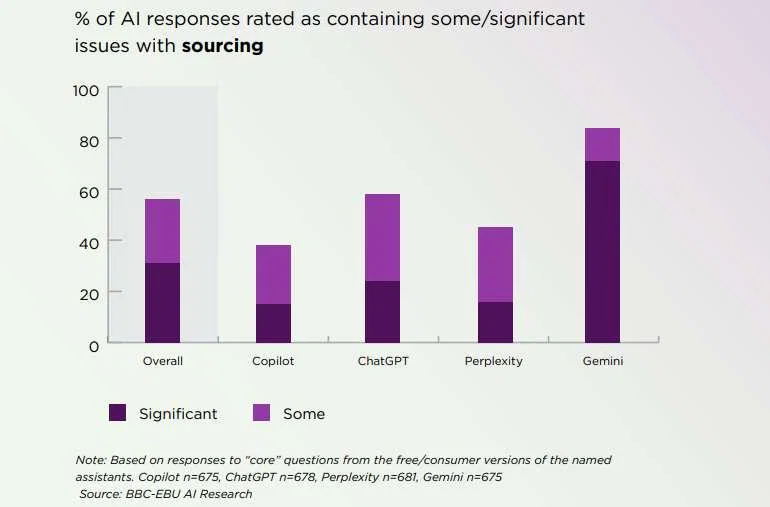

The errors weren’t limited to any one country or language. The study also found that Google’s Gemini performed worse than the other chatbots, with sourcing mistakes appearing in nearly 72 percent of its responses.

In the past, many of these errors were blamed on limited training data. OpenAI, for instance, said earlier versions of ChatGPT didn’t have access to information after September 2021 and couldn’t browse the web. Now that those technical gaps have mostly been closed, the continued presence of mistakes points to deeper, perhaps structural, issues within the technology itself.

Even so, the study did find some progress. Since the BBC’s earlier review in February, the rate of major errors has dropped from 51 percent to 37 percent, though Gemini still trails its rivals.

What’s most troubling is the gap between how these systems perform and how the public perceives them. Researchers found that many people already place significant trust in AI-generated news summaries. In the U.K., more than a third of adults, and nearly half of those under 35, say they trust AI to accurately recap the news. Even more concerning, 42 percent said that if an AI distorted a story, they would blame not just the AI but also the news outlet itself, or lose confidence in that outlet altogether. Unless these flaws are addressed, the growing use of generative AI could unintentionally undermine trust in traditional journalism.
