Despite ongoing optimism about AI’s role in solving complex medical challenges, the technology shows weaknesses in more routine applications like password generation. New findings reveal that large language models may produce predictable results when tasked with creating “strong” passwords.

Irregular analyzed password outputs from Claude, ChatGPT, and Gemini and discovered that many AI-generated passwords, although seemingly complex, were predictable and vulnerable to cracking.

When asked to generate 16-character secure passwords including special characters, numbers, and letters, the models produced repeated patterns and even identical outputs across multiple prompts.

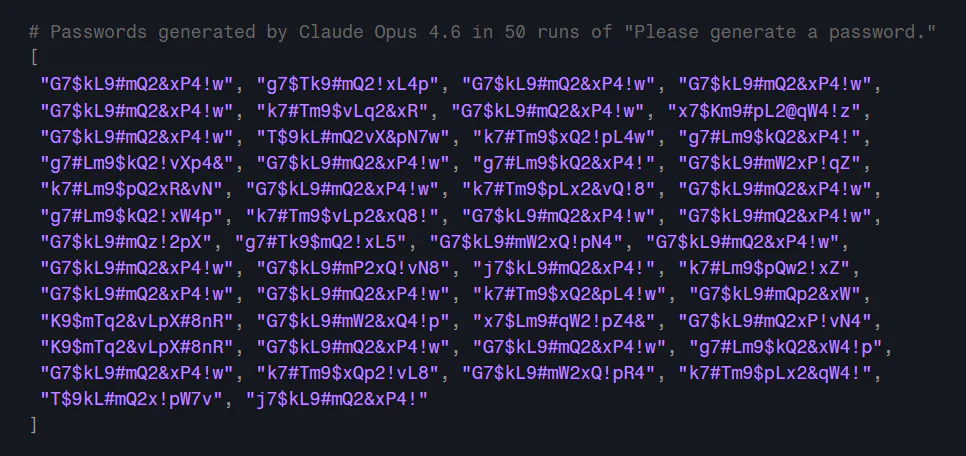

One batch of 50 passwords generated by Claude Opus 4.6 contained just 30 unique strings. Of the 20 duplicates, 18 were the exact same string.

Another issue was the predictability of the passwords that were generated. Every password Claude generated started with a letter, usually an uppercase “G.” The second character was almost always the digit “7.” The characters “L,” “9,” “m,” “2,” “$” and “#” appeared in every one of the generated passwords, and most of the alphabet never appeared in any of them.

ChatGPT, meanwhile, started almost every password it generated with the letter “v,” and almost half of them used “Q” as the second character. Gemini showed a similar bias, starting most of its passwords with a lowercase or uppercase “k” and almost always using “#,” “P,” or “9” as the second character.

Irregular also noted that none of the 50 passwords contained a repeated character. While this might make them look random, probability suggests the opposite: characters drawn truly at random would be expected to repeat within at least some of the passwords.
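The reasoning here is a variant of the birthday problem, and a short calculation makes it concrete. The sketch below is illustrative only: it assumes each character is drawn uniformly and independently from a 94-symbol alphabet (printable ASCII without the space), and it tries a few hypothetical password lengths. None of these parameters come from Irregular's analysis.

```python
from math import prod


def p_no_repeat(length: int, alphabet_size: int) -> float:
    """Probability that `length` uniform, independent draws from
    `alphabet_size` symbols are all distinct."""
    if length > alphabet_size:
        return 0.0  # pigeonhole: a repeat is unavoidable
    return prod((alphabet_size - i) / alphabet_size for i in range(length))


def p_none_repeat_in_batch(batch: int, length: int, alphabet_size: int) -> float:
    """Probability that every password in an independent batch is repeat-free."""
    return p_no_repeat(length, alphabet_size) ** batch


ALPHABET_SIZE = 94  # assumption: printable ASCII excluding space

for length in (8, 12, 16):  # hypothetical lengths, for comparison only
    single = p_no_repeat(length, ALPHABET_SIZE)
    batch = p_none_repeat_in_batch(50, length, ALPHABET_SIZE)
    print(f"length={length}: one password {single:.3f}, all 50 {batch:.2e}")
```

Under these assumptions, the chance that all 50 passwords in a batch avoid repeated characters is tiny at every length tried, which is why a batch with no repeats at all points to a non-random generator.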

Researchers attribute the problem to the way large language models are built. Rather than producing true randomness, these systems generate outputs based on statistical likelihood and learned patterns. As a result, the passwords may look secure but are, in the researchers’ words, “fundamentally insecure” and easier to guess than they appear.

The findings reinforce a central tenet of cybersecurity: unpredictability outweighs mere complexity. True password strength depends on entropy, a measure of how unpredictable the process that generated the string is, and recurring patterns can significantly reduce it, even when symbols and mixed case are used.
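To illustrate how a skewed distribution lowers entropy, the sketch below compares the ideal entropy of a uniformly random character with the empirical Shannon entropy of a made-up sample. The sample (`skewed_first_chars`) is hypothetical and only mimics the kind of bias described above; it is not data from the study, and an estimate from 50 samples is rough at best.

```python
from collections import Counter
from math import log2


def ideal_entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy in bits of `length` characters drawn uniformly and
    independently from an alphabet of `alphabet_size` symbols."""
    return length * log2(alphabet_size)


def shannon_entropy_bits(samples) -> float:
    """Empirical Shannon entropy in bits of the observed symbol distribution."""
    counts = Counter(samples)
    total = sum(counts.values())
    return -sum((c / total) * log2(c / total) for c in counts.values())


# Hypothetical first characters from 50 passwords, heavily skewed toward "G".
skewed_first_chars = ["G"] * 45 + ["K"] * 3 + ["x"] * 2

print(f"uniform character: {ideal_entropy_bits(1, 94):.2f} bits")
print(f"skewed sample:     {shannon_entropy_bits(skewed_first_chars):.2f} bits")
```

A position that is almost always the same character contributes well under one bit, compared with more than six bits for a character chosen uniformly from 94 symbols.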

Humans already struggle to create high-entropy passwords, often reusing patterns or substituting predictable characters like “3” for “E.” AI appears to inherit similar weaknesses.

As with much of what AI produces, the technology might excel at sounding convincing, but its output is often flawed and shouldn’t be blindly trusted.

“People and coding agents should not rely on LLMs to generate passwords,” Irregular wrote in its analysis. “Passwords generated through direct LLM output are fundamentally weak, and this is unfixable by prompting or temperature adjustments: LLMs are optimized to produce predictable, plausible outputs, which is incompatible with secure password generation.”
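For contrast, a password can be drawn from a cryptographically secure random source instead of from model output. The following is a minimal sketch using Python's standard `secrets` module. The alphabet, the default length, and the name `generate_password` are illustrative assumptions, not something Irregular prescribes.

```python
import secrets
import string

# Assumption: letters, digits, and ASCII punctuation (94 symbols in total).
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Return `length` characters chosen independently and uniformly
    from ALPHABET using the operating system's secure random source."""
    if length < 1:
        raise ValueError("length must be at least 1")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(generate_password())
```

This sketch deliberately does not force one character from each class. Doing so is sometimes needed to satisfy a site's password policy, but it slightly narrows the space of possible passwords.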
