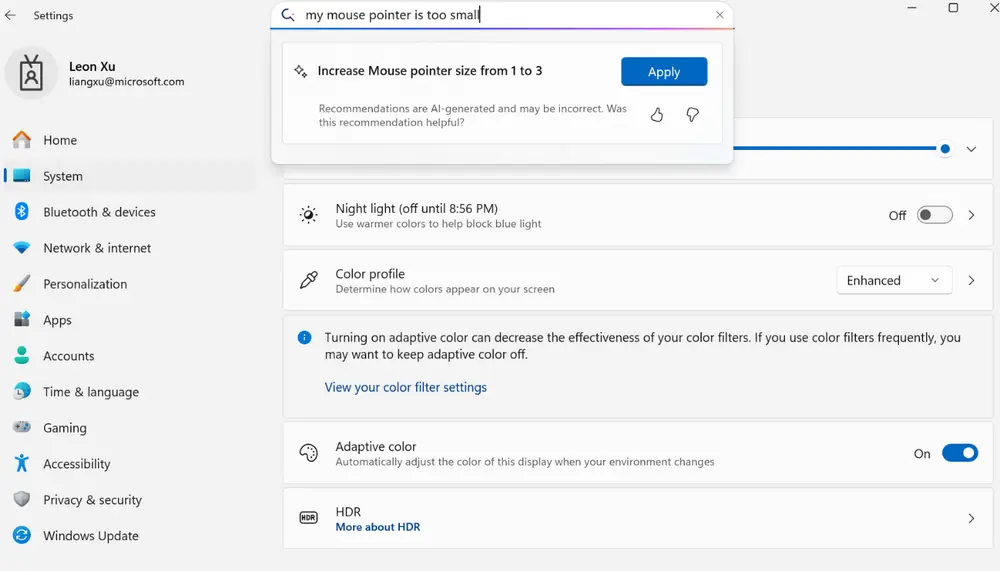

Microsoft has unveiled Mu, its latest on-device small language model, designed to improve the user experience directly on your device. The compact model is tailored to tasks with complex input-output relationships and runs entirely locally, without relying on the cloud. Mu currently powers the agent in Windows Settings, available to Windows Insiders in the Dev Channel on Copilot+ PCs, where it maps natural language queries to the corresponding Settings functions.

One of Mu’s standout features is that it is fully offloaded onto the Neural Processing Unit (NPU), producing responses at over 100 tokens per second. That speed lets the Settings agent keep latency low, which is crucial for a smooth user experience. In this article, we’ll dive into how Mu was designed, trained, and fine-tuned to create a smarter, more efficient Settings agent.

Table of Contents

- What Makes Mu Special?

- The Tech Behind the Mu

- Packing Power into a Tiny Package

- Training a Tiny Giant

- Making It Run Lightning-Fast

- The Settings Agent in Action

- Real-World Performance

- Challenges and Solutions

- What’s Next?

What Makes Mu Special?

Here’s the thing about Mu: it’s designed for tricky scenarios that require working out complex relationships between what you ask for and what should actually happen. Think of it as the brain behind the new AI agent in Windows Settings, which understands natural language requests and translates them into actual system changes.

Mu runs entirely on your device’s Neural Processing Unit (NPU), churning out responses at over 100 tokens per second. That’s fast enough to keep up with your typing and thinking, which is exactly what you want from a Settings assistant.

The Tech Behind the Mu

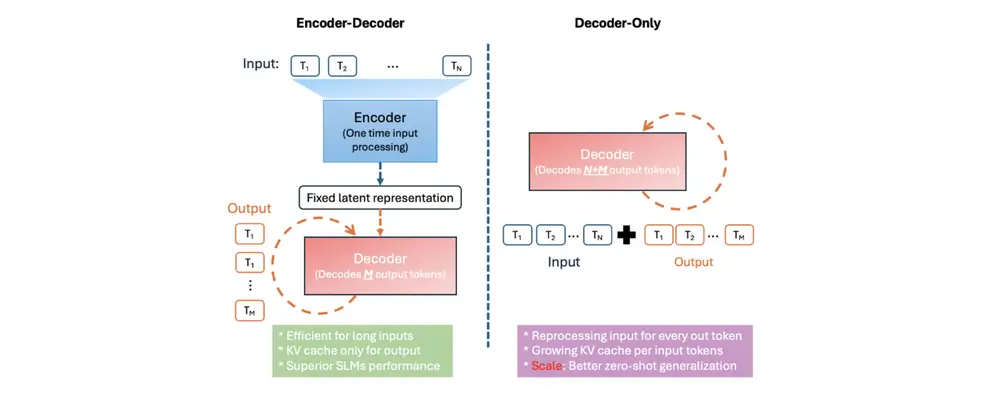

Mu isn’t just another language model: it’s a carefully designed 330-million-parameter encoder-decoder model built specifically for NPUs and edge devices. In practical terms, the combination of a small parameter count and an architecture tuned for NPU hardware is what makes it so efficient.

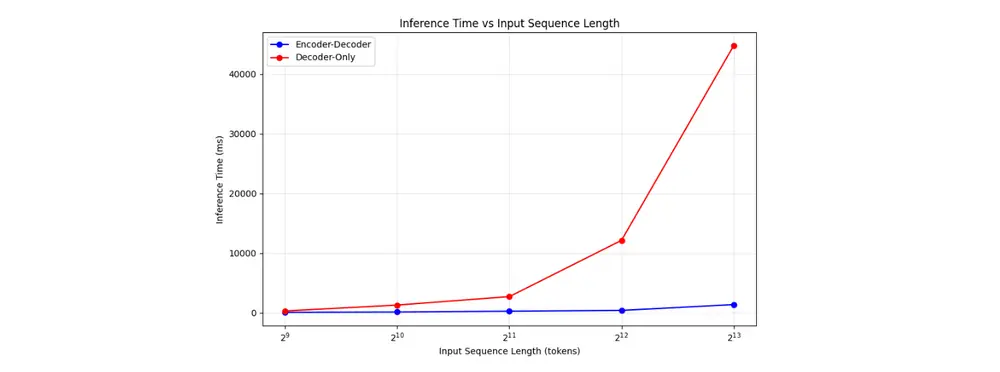

The encoder-decoder architecture is where Mu really shines. In a decoder-only model, the input and the generated output share a single sequence, so each new token has to attend over both. Mu’s encoder instead processes your input once into a fixed representation, and the decoder reuses that representation at every generation step. It’s like having a really good translator who remembers what you said instead of asking you to repeat everything.

This design choice pays off. When tested on Qualcomm’s Hexagon NPU, Mu showed 47% lower first-token latency and 4.7× higher decoding speed than decoder-only models of similar size. Those numbers translate directly into a noticeably snappier user experience.
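As a back-of-the-envelope check on how those two speedups combine, the sketch below applies them to a decoder-only baseline. The baseline latencies and the 30-token response length are hypothetical placeholders, not measurements from the article.

```python
# Applying the reported speedups to a hypothetical decoder-only baseline.

def response_time_ms(first_token_ms: float, per_token_ms: float, n_tokens: int) -> float:
    # Time to the first token plus time to decode the remaining tokens.
    return first_token_ms + per_token_ms * (n_tokens - 1)


baseline_first_ms = 100.0  # hypothetical decoder-only first-token latency
baseline_tok_ms = 20.0     # hypothetical decoder-only time per generated token

mu_first_ms = baseline_first_ms * (1 - 0.47)  # 47% lower first-token latency
mu_tok_ms = baseline_tok_ms / 4.7             # 4.7x higher decoding speed

baseline_total = response_time_ms(baseline_first_ms, baseline_tok_ms, 30)
mu_total = response_time_ms(mu_first_ms, mu_tok_ms, 30)
```

With these placeholder inputs, a 30-token response drops from 680 ms to about 176 ms, and most of the gain comes from the faster decoding rate.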

Packing Power into a Tiny Package

Microsoft didn’t just make Mu small; they made it smart-small. The model incorporates three key upgrades that maximize performance within its compact size:

Dual LayerNorm keeps training stable by applying layer normalization both before and after each sublayer (pre- and post-LayerNorm). It’s like having quality control at multiple checkpoints.
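The following minimal sketch shows the generic pre- and post-LayerNorm pattern around a residual sublayer. Learnable gain and bias are omitted, and `sublayer` stands in for an attention or feed-forward block. This illustrates the pattern only and is not Mu’s actual layer code.

```python
import math
from typing import Callable


def layer_norm(x: list[float], eps: float = 1e-5) -> list[float]:
    # Normalize to zero mean and unit variance (learnable gain/bias omitted).
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def dual_norm_block(x: list[float], sublayer: Callable[[list[float]], list[float]]) -> list[float]:
    h = sublayer(layer_norm(x))        # pre-LN: normalize the sublayer's input
    y = [a + b for a, b in zip(x, h)]  # residual connection
    return layer_norm(y)               # post-LN: normalize the block's output
```

Normalizing on both sides keeps each sublayer's input and the residual stream on a fixed scale. That helps a small model train stably.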

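Rotary Positional Embeddings (RoPE) encode each token’s position as a rotation of its query and key vectors, so attention scores depend on the relative distance between tokens. This helps Mu track relationships in longer text sequences, even beyond the lengths it saw during training, which is crucial for handling complex Settings queries.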
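Here is a minimal RoPE sketch: each pair of dimensions is rotated by an angle proportional to the token position. The base of 10000 follows the original RoPE formulation and is an assumption here, because the article does not state Mu’s settings.

```python
import math


def rope(vec: list[float], pos: int, base: float = 10000.0) -> list[float]:
    # Rotate each (even, odd) dimension pair by pos * base^(-2k/d).
    d = len(vec)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = list(vec)
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        out[i] = x0 * c - x1 * s
        out[i + 1] = x0 * s + x1 * c
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))
```

The useful property is that `dot(rope(q, m), rope(k, n))` depends only on the offset `m - n`. A query at position 3 scores a key at position 1 exactly as a query at 10 scores a key at 8.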

Grouped-Query Attention (GQA) reduces memory use and speeds up processing by letting groups of query heads share a single set of key and value heads. The separate query heads still let the model focus on different aspects of your query.
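The sketch below shows how GQA maps query heads onto shared key/value heads, and what that does to the size of the key/value cache. The head counts, head size, sequence length, and layer count are hypothetical, because the article does not give Mu’s configuration.

```python
# GQA head sharing and KV-cache size, with hypothetical dimensions.

def kv_head_for(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    # Consecutive query heads form a group that reads the same K/V head.
    assert n_query_heads % n_kv_heads == 0
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def kv_cache_values(n_kv_heads: int, head_dim: int, seq_len: int, n_layers: int) -> int:
    # Keys plus values stored per layer, per K/V head, per position.
    return 2 * n_kv_heads * head_dim * seq_len * n_layers


n_q, n_kv = 8, 2
mapping = [kv_head_for(h, n_q, n_kv) for h in range(n_q)]  # [0, 0, 0, 0, 1, 1, 1, 1]
full = kv_cache_values(n_q, 64, 512, 12)      # standard attention: one K/V head per query head
grouped = kv_cache_values(n_kv, 64, 512, 12)  # GQA: 2 shared K/V heads
```

With 8 query heads sharing 2 key/value heads, the cache shrinks by a factor of 4. That memory saving is what matters on an NPU with a tight memory budget.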

Training a Tiny Giant

Building Mu wasn’t a simple process. Microsoft started by pre-training on hundreds of billions of high-quality educational tokens using Azure Machine Learning and A100 GPUs. Then came the clever part: they used knowledge distillation from their larger Phi models to pack more capability into Mu’s smaller frame.
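For readers unfamiliar with knowledge distillation, here is a minimal sketch of the common soft-target formulation. The student is trained to match the teacher’s temperature-softened output distribution. The article does not say which loss or temperature Microsoft used for Mu, so treat this as the textbook version for illustration only.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student), scaled by T^2 so gradient magnitudes stay comparable
    # across temperatures.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2
```

The loss is zero when the small model reproduces the large model’s distribution and grows as they diverge. The soft targets carry more information per example than a single correct label.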

The result? A base model that performs remarkably well across various tasks, but really shines when fine-tuned for specific applications like the Windows Settings agent.

Making It Run Lightning-Fast

To get Mu running smoothly on your device, Microsoft applied some serious optimization. They used Post-Training Quantization (PTQ), which converts an already trained model from floating-point to lower-precision integer representations, primarily 8-bit and 16-bit formats, without retraining it.
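The core of post-training quantization is small enough to sketch. Below is symmetric, per-tensor quantization of weights to int8: pick a scale from the largest absolute weight, then round and clamp. Real toolchains typically add per-channel scales, calibration data, and mixed 8-bit/16-bit formats. This shows only the basic step, not Microsoft’s pipeline.

```python
# Symmetric per-tensor int8 quantization (illustrative core step only).

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [v * scale for v in q]
```

Each weight is stored in one byte instead of four, and the round-trip error per weight is at most half the scale. That trade-off is why PTQ suits NPUs, which are built for fast integer arithmetic.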

But here’s what’s really impressive: Microsoft worked directly with AMD, Intel, and Qualcomm to tune Mu’s operations for each company’s NPU hardware. The result? Over 200 tokens per second on devices like the Surface Laptop 7, with fast response times even when processing large amounts of context.

The Settings Agent in Action

Now, let’s talk about why Mu exists in the first place: making Windows Settings easier to use. Anyone who’s tried to find a specific setting in Windows knows the pain of clicking through endless menus and submenus.

Microsoft’s solution was elegant: build an AI agent that understands natural language and can directly execute setting changes. The challenge was that it needed to be fast enough for real-time use and accurate enough to avoid frustrating users.
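To show what "directly execute setting changes" can look like downstream of the model, here is a hypothetical sketch. The model emits a structured action string, which is parsed and dispatched only to whitelisted handlers. The action format, function names, and handlers are invented for illustration, because the article does not document Mu’s output schema.

```python
import re

# Hypothetical action format: name(key=value) or name().
ACTION_RE = re.compile(r"^(\w+)\((?:(\w+)=(\w+))?\)$")


def set_brightness(level: str) -> str:
    return f"brightness set to {int(level)}%"


def enable_dark_mode() -> str:
    return "dark mode enabled"


# Only functions listed here can ever run.
HANDLERS = {"set_brightness": set_brightness, "enable_dark_mode": enable_dark_mode}


def dispatch(model_output: str) -> str:
    match = ACTION_RE.match(model_output.strip())
    if not match or match.group(1) not in HANDLERS:
        return "fallback: show search results"
    name, key, value = match.groups()
    handler = HANDLERS[name]
    try:
        return handler(**{key: value}) if key else handler()
    except (TypeError, ValueError):  # wrong argument name or malformed value
        return "fallback: show search results"
```

A whitelist keeps any malformed or unexpected model output from turning into an arbitrary system change. It falls back to ordinary search instead.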

Initially, Microsoft tried Phi with LoRA fine-tuning, which met the accuracy goals but was too large to meet the latency requirements. Mu’s compact design fit the latency budget, but it needed extensive fine-tuning to reach the required accuracy.
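LoRA (Low-Rank Adaptation) freezes a pretrained weight matrix `W` and learns a small low-rank update `B @ A` added on top, scaled by `alpha / r`. The minimal sketch below uses toy shapes and values. The article only states that Phi was fine-tuned with LoRA and gives no ranks or hyperparameters.

```python
# LoRA-adapted linear layer with plain Python lists (toy illustration).

def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: list[list[float]], A: list[list[float]], B: list[list[float]],
                 x: list[float], alpha: float, r: int) -> list[float]:
    base = matvec(W, x)              # frozen pretrained path (d_out x d_in)
    update = matvec(B, matvec(A, x)) # A: r x d_in, B: d_out x r (trainable)
    return [b + (alpha / r) * u for b, u in zip(base, update)]
```

Only `A` and `B` are trained, which is `r * (d_in + d_out)` values instead of `d_in * d_out`. `B` usually starts at zero so the adapted model initially behaves exactly like the base model. LoRA makes fine-tuning cheap, but inference still pays for the full base model, which is why Phi with LoRA could not meet the latency target.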

The training process was intensive. Microsoft scaled up to 3.6 million training samples (1,300 times more than their initial dataset) and expanded coverage from about 50 settings to hundreds. They used synthetic data generation, prompt tuning, diverse phrasing techniques, and smart sampling to create a robust training dataset.
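For scale, 3.6 million samples at 1,300 times the original implies a starting set of roughly 2,800 samples. One of the listed techniques, diverse phrasing, can be sketched as template-based generation of (query, setting) pairs with sampling so that no single phrasing dominates. The templates, verbs, and setting IDs below are hypothetical, because the article names the techniques but not their implementation.

```python
import itertools
import random

# Hypothetical templates and intents for synthetic (query, setting_id) pairs.
TEMPLATES = ["{verb} {target}", "can you {verb} {target}", "please {verb} the {target}"]
INTENTS = {
    "display.brightness.up": (["increase", "raise", "turn up"],
                              ["screen brightness", "brightness of my display"]),
    "system.dark_mode.on": (["enable", "turn on", "switch to"],
                            ["dark mode", "dark theme"]),
}


def synthesize(seed: int = 0, per_intent: int = 5) -> list[tuple[str, str]]:
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    samples = []
    for setting_id, (verbs, targets) in INTENTS.items():
        combos = [t.format(verb=v, target=g)
                  for t, v, g in itertools.product(TEMPLATES, verbs, targets)]
        # Sample a subset so no single phrasing pattern dominates the data.
        for query in rng.sample(combos, k=min(per_intent, len(combos))):
            samples.append((query, setting_id))
    return samples
```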

Reported scores for fine-tuned Mu versus fine-tuned Phi:

| Task | Fine-tuned Mu | Fine-tuned Phi |
| --- | --- | --- |
| SQuAD | 0.692 | 0.846 |
| CodeXGLUE | 0.934 | 0.930 |
| Settings Agent | 0.738 | 0.815 |

Real-World Performance

The fine-tuned Mu model achieves response times under 500 milliseconds across hundreds of Windows settings, with accuracy that trails the larger fine-tuned Phi model only modestly (0.738 versus 0.815 on the Settings Agent task). It works best with multi-word queries that clearly express intent, so “increase screen brightness” works better than just “brightness.”
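A quick latency budget check shows why the decoding speed matters here. The 200 tokens per second rate and the 500 ms target come from the article. The prefill time and response lengths below are hypothetical.

```python
# Does a response fit the latency target? Prefill time and token counts are hypothetical.

def fits_budget(prefill_ms: float, output_tokens: int,
                tokens_per_s: float = 200.0, budget_ms: float = 500.0) -> bool:
    decode_ms = output_tokens / tokens_per_s * 1000.0  # 5 ms per token at 200 tokens/s
    return prefill_ms + decode_ms <= budget_ms
```

With a hypothetical 100 ms prefill, a 40-token action fits comfortably (300 ms total), while a 100-token answer would not (600 ms). That makes short, structured outputs a good match for the target.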

Microsoft was smart about handling ambiguous queries, too. Short, unclear requests still get traditional search results, while clear multi-word queries trigger the AI agent for precise, actionable responses.
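The routing rule can be sketched as a small gate in front of the agent. The two-word minimum and the confidence threshold are illustrative assumptions: the article only says that short, unclear queries get search results and clear multi-word queries go to the agent, and it does not mention a confidence score.

```python
# Hypothetical router between classic Settings search and the agent.

def route(query: str, agent_confidence: float,
          min_words: int = 2, min_confidence: float = 0.7) -> str:
    if len(query.split()) < min_words:
        return "search"  # short or single-word queries: classic search results
    if agent_confidence < min_confidence:
        return "search"  # assumed gate: the agent is unsure, so don't guess
    return "agent"
```

Falling back to search when unsure keeps a wrong guess from changing a setting the user didn't ask for.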

Challenges and Solutions

One interesting challenge Microsoft faced was dealing with overlapping functionalities. Take “increase brightness” – if you have dual monitors, which screen should get brighter? Microsoft addressed this by prioritizing the most commonly used settings and refining its training data accordingly.
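One way to apply "prioritize the most commonly used settings" while building training data is to label an ambiguous phrase with whichever candidate setting users change most often. The sketch below shows that idea. The setting IDs and usage counts are invented, and this is an illustration of the principle rather than Microsoft’s actual procedure.

```python
from collections import Counter

# Hypothetical usage counts per setting ID.
USAGE = Counter({"display.brightness.primary": 9200, "display.brightness.secondary": 1100})


def label_ambiguous(candidates: list[str], usage: Counter = USAGE) -> str:
    # Counter returns 0 for unseen IDs, so unknown candidates rank last.
    return max(candidates, key=lambda setting_id: usage[setting_id])
```

For “increase brightness” with two monitors, the phrase would be labeled with the primary display’s brightness setting.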

They also had to balance precision with coverage. The model performs best on clear, multi-word queries, so they integrated it smartly with the existing Settings search to handle different types of user input appropriately.

What’s Next?

Microsoft is actively seeking feedback from Windows Insiders to refine the Settings agent experience. This is just the beginning – Mu represents a new approach to on-device AI that could expand to other areas of Windows and beyond.

The combination of efficient architecture, hardware-specific optimization, and task-specific fine-tuning makes Mu a compelling example of how AI can enhance user experience without requiring cloud connectivity or compromising privacy.

For Windows users, this means a more intuitive way to interact with system settings. For the broader tech community, Mu demonstrates how purpose-built, efficient AI models can deliver impressive performance in resource-constrained environments.

As Microsoft continues to refine this technology based on user feedback, we can expect even more sophisticated on-device AI experiences that make our computers more helpful and easier to use.
