As competition over artificial intelligence intensifies, OpenAI is offering a salary of more than $500,000 for an executive role focused exclusively on preventing the most serious risks associated with its technology.

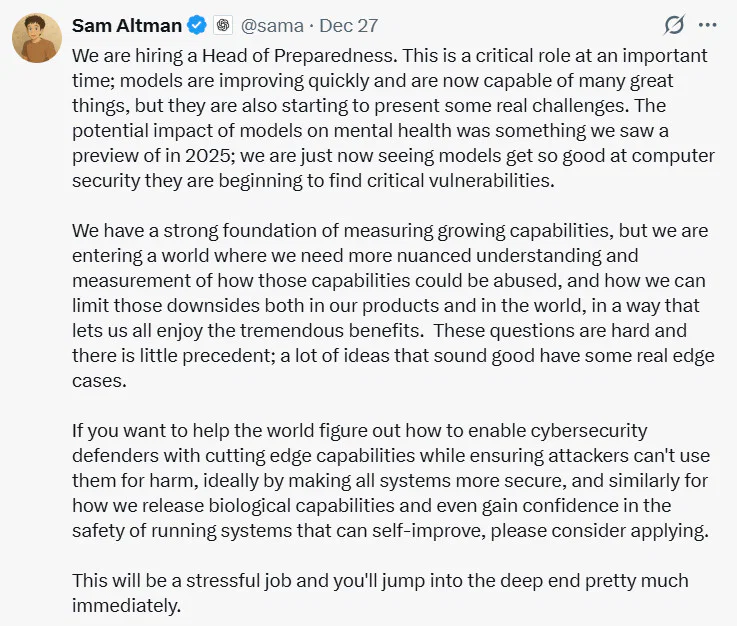

The listing for a “Head of Preparedness” role offers a base salary of $555,000, plus equity. OpenAI chief executive Sam Altman promoted the opening on social media, cautioning that the job would be “stressful” and that whoever lands it would be “jumping into the deep end pretty much immediately.”

“This is a critical role at an important time,” Altman wrote in an X post on Saturday. He outlined the escalating challenges, noting that while AI models are “capable of many great things,” they are “also starting to present some real challenges.”

Altman highlighted an early “preview” of AI’s impact on mental health and cautioned that today’s models are becoming capable of identifying critical computer security vulnerabilities, a dual-use development with both defensive and offensive implications.

The job description puts those worries into writing, spelling out the risks the executive would be expected to tackle, including large-scale job losses, advanced misinformation campaigns, abuse by malicious actors, environmental impacts, and what it calls the “erosion of human agency.”

A salary of that size suggests OpenAI sees the risks as real and urgent. At the same time, the company is under mounting pressure from critics who argue it has sidelined safety concerns in the race to build and sell products like ChatGPT.

OpenAI’s stated mission is to build artificial intelligence “that benefits all of humanity,” with safety embedded as a founding principle. Yet former employees have publicly accused the company of drifting away from that goal.

Jan Leike, who previously co-led OpenAI’s now-disbanded Superalignment safety team, resigned in May 2024, saying that the company’s “safety culture and processes have taken a backseat to shiny products.”

“Building smarter-than-human machines is an inherently dangerous endeavor,” Leike wrote. “OpenAI is shouldering an enormous responsibility on behalf of all of humanity.”

Just days after his resignation, another researcher, Daniel Kokotajlo, also left. He said he no longer trusted the company to behave responsibly as it gets closer to artificial general intelligence (AGI), meaning AI that can rival or surpass human reasoning. Kokotajlo later told Fortune that the departures had shrunk the AGI safety team from about 30 people to about half that number.

The new Head of Preparedness will be responsible for rebuilding and strengthening OpenAI’s safety infrastructure. Positioned within the Safety Systems team, the role centers on developing capability evaluations, threat models, and mitigation strategies to create what the company describes as a coherent, rigorous, and scalable safety pipeline.

The position was previously held by Aleksander Madry, who moved to a new role within the company in July 2024.

The hiring push comes as OpenAI has acknowledged real-world harms linked to its products. In some cases, ChatGPT’s rise as a conversational companion has worsened mental health issues, including instances where the chatbot reinforced delusions or harmful behavior. In October, the company said it was working with mental health professionals to improve how the system responds to users showing signs of psychosis or self-harm.
